Thanks, that’s helpful and will work for my needs. I was also thinking that I’m OK with it being a separate action. I didn’t mean to suggest that you should change yours. I’m just not savvy enough to parse or split text and create my own action. Appreciate your wonderful work!

Hello! This action is absolutely incredible!

I just have 2 questions about it if anyone could help:

- It seems that it stopped working this morning. Has anyone worked on a fix? I believe it’s ChatGPT’s API that isn’t working.

- Once the API key has been entered, there’s no way to change it to another. It prompts a single time per device and that’s it. How can we make it prompt again?

Thanks!

You can reset the API key by forgetting the credentials in Preferences, then you will be prompted again when you run the Action.

I wondered if the ChatGPT API is not working - this action stopped working, but the website for ChatGPT works fine for me

Yes, same thing here. It was working fine from Drafts for the last several weeks. Now today it’s not working. I reloaded the install, obtained a new key, went to settings and forgot the previous chat credentials; it still does not work.

Here is the error message I received:

    Script step completed.
    Chat failed: 429,
    undefined
    Script step completed.

Still working for me today. I didn’t change anything. I suspect ChatGPT API will go down sometimes. Who knows? Keep trying.

You can reference the OpenAI API docs regarding error codes.

A 429 indicates you have either reached your request quota or are being rate limited for too many requests.

Still confused! I can use ChatGPT on my computer, but not from the Drafts app? I guess ChatGPT wants to limit developers’ apps from overloading the main system?

Thank you for your response

Have a great day!

To help diagnose the problem, I have improved the error handling in the action. Please update the action and try again. If an error occurs, you will see an alert with the raw response from the OpenAI API. If you require further assistance, please share the content here.

As for the case where the ChatGPT web app works fine but the API doesn’t, I think it’s mostly because of the rate limit in the API, which is stricter than the web app’s. Your ISP or VPN provider may be responsible for it if they put multiple tenants (including you) on a single public IP. My solution is to deploy a reverse proxy for the OpenAI API on Cloudflare or Vercel using tools like egoist/openai-proxy, but this may require some development skills and is only helpful for IP rate limits.

Thanks for looking into this. With the new version, I get:

Chat failed with HTTP status 429.

Body:

    {
      "error": {
        "message": "You exceeded your current quota, please check your plan and billing details.",
        "type": "insufficient_quota",
        "param": null,
        "code": null
      }
    }

ChatGPT must have made a recent change, I’m on the free plan, but hadn’t used the Drafts action for several weeks. Web version works fine. Too bad, it is real handy to get the results directly into a draft.

You should be able to check your status on your API usage page at OpenAI. They provide pretty detailed information. Have you set up your billing information and usage quotas for your account? The API is separate from the website and requires you to set up that information for your account.
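To make the two 429 cases easier to tell apart, here is a minimal sketch of how an action could turn the raw response into a short hint. The function name `describeChatError` and the message wording are my own inventions, not part of the action; the only value taken from this thread is the `insufficient_quota` error type in the body posted above. Any other 429 is assumed to be rate limiting.

```javascript
// Hypothetical helper: turn a failed API response into a short, readable hint.
// Only the "insufficient_quota" type comes from the error body quoted in this thread.
function describeChatError(status, bodyText) {
  let errorType = null;
  try {
    const parsed = JSON.parse(bodyText);
    if (parsed && parsed.error && typeof parsed.error.type === "string") {
      errorType = parsed.error.type;
    }
  } catch (e) {
    // The body was not valid JSON; carry on with errorType left as null.
  }
  if (status === 429 && errorType === "insufficient_quota") {
    return "Quota exceeded: check billing details and usage limits for this API key.";
  }
  if (status === 429) {
    // Assumption: a 429 without the quota type is treated as rate limiting.
    return "Probably rate limited: wait a little and try again.";
  }
  return "Chat failed with HTTP status " + status + ".";
}
```

With the body posted above, this would report the quota case, which points at billing setup rather than at too many requests.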

The API returns the number of tokens used by a request, and so it might be worth adding a message to each run (app.displayInfoMessage()) to help users understand how many tokens are being used, or (/and) even the financial cost for each API call. That would give users a better visualisation of what they are using up with each request.

Unfortunately, I can’t see any way in the API to query how many tokens a user has remaining. That would be even more useful to be able to show a user.

Unless I am missing something, that lack of being able to query the quota before making other calls seems to be a curious omission by OpenAI. I know limits can be set, but maybe not having that immediate visibility leads to greater use as people are not really keeping track of how much they are spending?

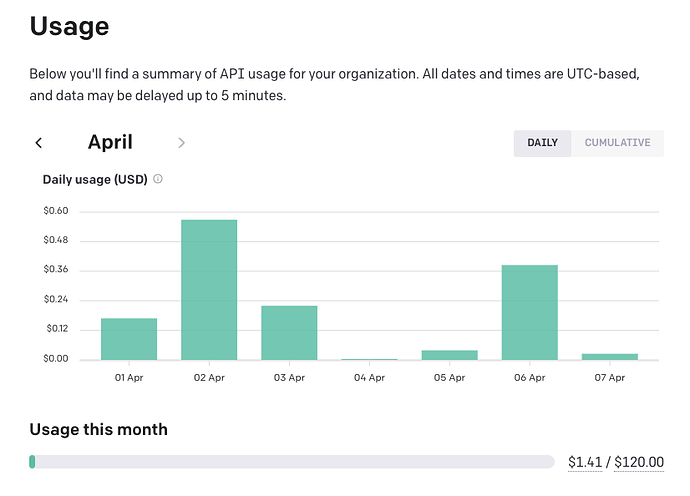

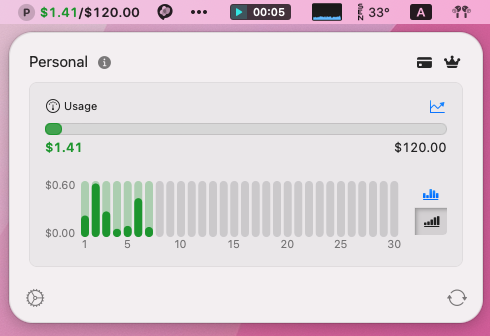

Sharing my API usage as an example since it’s not confidential (is it?)

To @sylumer

> Unfortunately, I can’t see any way in the API to query how many tokens a user has remaining. That would be even more useful to be able to show a user.

There’s no “remaining tokens” figure. OpenAI uses a “pay as you go” pricing model; the $120 limit is the maximum usage that OpenAI allows for the account.

The idea of adding token usage to each run is interesting, but this would be very hard to implement properly using a plain-text-based interface. I think the price is fairly cheap compared with a ChatGPT Plus subscription, even for the gpt-4 model, so I just occasionally check the usage page to ensure it’s within a normal range.

If you really want to keep an eye on the usage more often, I recommend using Waige, which is a menubar app that shows the API usage and costs.

This returns an error telling me I’m over quota, but I know I’m not (other apps still working on same API).

Unless I misunderstood something in the API documentation, I don’t think it would be “very hard”.

According to the docs, the API call returns the number of tokens used. Here is one of their example API responses.

    {
      'id': 'chatcmpl-6p9XYPYSTTRi0xEviKjjilqrWU2Ve',
      'object': 'chat.completion',
      'created': 1677649420,
      'model': 'gpt-3.5-turbo',
      'usage': {'prompt_tokens': 56, 'completion_tokens': 31, 'total_tokens': 87},
      'choices': [
        {
          'message': {
            'role': 'assistant',
            'content': 'The 2020 World Series was played in Arlington, Texas at the Globe Life Field, which was the new home stadium for the Texas Rangers.'},
          'finish_reason': 'stop',
          'index': 0
        }
      ]
    }

Note usage > total_tokens.

Using the function I noted earlier you could display that value at the end of the action run to say “Query used X tokens”, substituting in the total token value for X. It would just appear briefly as an in app message. You could optionally write it to the action log for historical tracking.
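As a rough sketch of that idea, assuming the action already has the parsed JSON response in hand: the helper names `usageMessage` and `reportUsage` are hypothetical, and I am assuming that `console.log` output ends up in the action log. The `typeof app` check is only there so the snippet can also be run outside Drafts, where no `app` global exists.

```javascript
// Build the brief message from a parsed chat completion response.
// Returns null when the response carries no usable token count.
function usageMessage(response) {
  const usage = response && response.usage;
  if (!usage || typeof usage.total_tokens !== "number") {
    return null;
  }
  return "Query used " + usage.total_tokens + " tokens";
}

// Show the message briefly in the app and record it for later reference.
// Outside Drafts there is no `app` global, so the in-app message is skipped.
function reportUsage(response) {
  const message = usageMessage(response);
  if (message === null) {
    return null;
  }
  if (typeof app !== "undefined") {
    app.displayInfoMessage(message);
  }
  console.log(message); // assumption: this is written to the action log
  return message;
}
```

For the example response above, `usageMessage` would read `usage.total_tokens` and produce “Query used 87 tokens”.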

Since the pricing for the model is known…

gpt-3.5-turbo usage: $0.002 / 1K tokens

… a query cost can be calculated from a known token count.

I have never used ChatGPT (directly at least), so I have no real feel for the number of tokens and the costs a typical user might incur, but it might well be that anything costing less than 1 cent could be abbreviated (e.g. “Cost: < 1 cent”). But it may be that everything in practice costs less than 1 cent, and so including a financial figure would never really make sense.
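To put numbers on that, here is a small sketch of the calculation. The function name `costLabel` and the label wording are made up for illustration, and the price is simply the $0.002 / 1K tokens figure quoted above, so it would need updating for other models or if pricing changes.

```javascript
// Assumed price, taken from the figure quoted in this post: $0.002 per 1K tokens.
const PRICE_PER_1K_TOKENS_USD = 0.002;

// Convert a token count into a short cost label, abbreviating tiny amounts.
function costLabel(totalTokens, pricePer1k = PRICE_PER_1K_TOKENS_USD) {
  const cents = (totalTokens / 1000) * pricePer1k * 100;
  if (cents < 1) {
    return "Cost: < 1 cent";
  }
  return "Cost: about " + cents.toFixed(1) + " cents";
}
```

At this price, 1 cent buys 5,000 tokens, so the 87-token example above comes out as “Cost: < 1 cent”, and a query would need to be quite large before a real figure appears.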

What error number is it, and are you definitely using exactly the same API key for all of the apps?

I’ve submitted a new version to fix a problem: I had left my own API endpoint in the action. You may have been sending requests to OpenAI via my reverse proxy deployed on Cloudflare Workers. Although that should not cause the quota error, I recommend updating the action in case it’s relevant.

For those who may be concerned @george_tenedios @BobinNV @avatar

Thanks for the great explanation of the API data structure. However, what I was trying to say was that displaying the token usage/costs in the draft and making it less intrusive is kind of hard. Sorry if I didn’t make it clear. It’s a design problem rather than a technical one.

I’m thinking about adding a new section called <!-- block meta -->, where we can add parameters like temperature, frequency_penalty, and the accumulated token usages in the current draft (analytics sounds good). This may be better than appending the usage at the end or displaying an alert every time after running.

It is only a suggestion, and for anyone who wants it, it should be straightforward to add it in. Many people have All Notifications set for their actions, which uses the same brief message approach as the function I noted, so while I agree it is an intrusion, I suspect that in reality it is minimal. If you change your mind at some point in the future, you could even incorporate an option to show or not show such information.